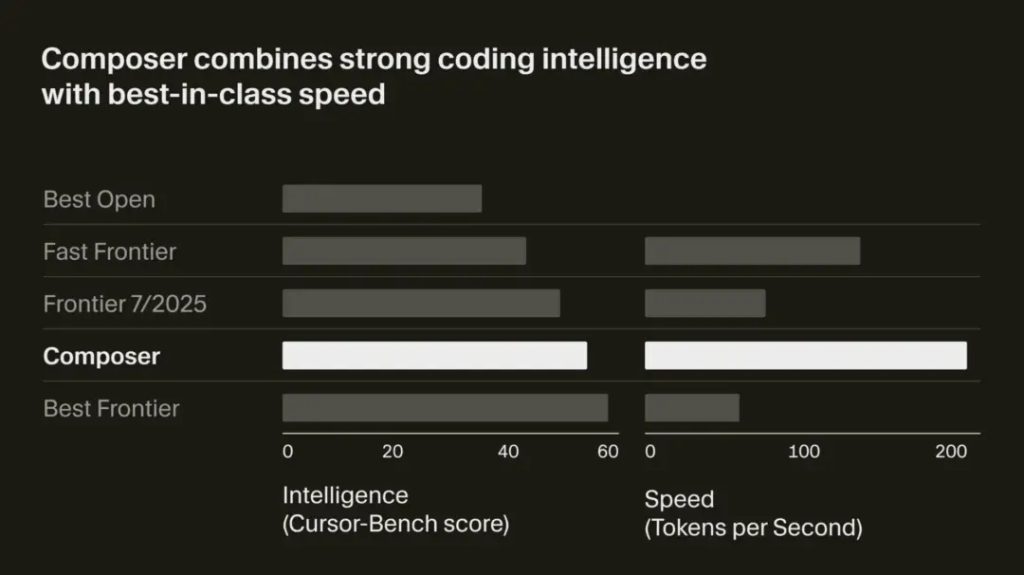

Long dismissed as a “wrapper” tool, a thin shell around other companies’ models, Cursor has finally launched its first self-developed coding model: Composer.

According to Cursor, Composer runs at 4x the speed of comparably capable models. The company describes it as a model built specifically for low-latency agentic coding, capable of completing most tasks in under 30 seconds.

In the “Speed” category, Composer reaches 200 tokens per second.

Beyond the self-developed model, Cursor has redesigned its interface around a multi-agent mode in which a single prompt can launch up to 8 agents in parallel. Each agent works in its own git worktree or on a remote machine, so their file edits do not conflict.
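The worktree isolation described above can be illustrated with plain git. The sketch below only builds the `git worktree add` commands, one per agent, and does not run them. The branch names, directory layout, and the `worktree_commands` helper are hypothetical; Cursor has not published how it sets up worktrees internally.

```python
from pathlib import Path

MAX_AGENTS = 8  # upper limit on parallel agents stated in the article


def worktree_commands(repo: str, n_agents: int, base: str = "HEAD") -> list[list[str]]:
    """Build one `git worktree add` command per agent (nothing is executed)."""
    if not 1 <= n_agents <= MAX_AGENTS:
        raise ValueError(f"n_agents must be between 1 and {MAX_AGENTS}")
    repo_path = Path(repo)
    commands = []
    for i in range(1, n_agents + 1):
        branch = f"agent-{i}"  # hypothetical branch naming scheme
        # Each checkout lives in its own sibling directory, so no two agents
        # ever write to the same working tree.
        path = repo_path.parent / f"{repo_path.name}-agent-{i}"
        commands.append(
            ["git", "-C", str(repo_path), "worktree", "add", "-b", branch, str(path), base]
        )
    return commands


if __name__ == "__main__":
    for cmd in worktree_commands("/tmp/proj", 3):
        print(" ".join(cmd))
```

Because every agent gets a separate branch and directory, their edits can be compared or merged afterwards with ordinary git tooling.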

Version 2.0 also embeds a browser directly into the editor, a significant boon for front-end development. Users can select elements directly and forward DOM information to Cursor.

The update further introduces a new code review feature, making it easier to view all changes made by Agents across multiple files—no more switching back and forth between files.

An even bigger highlight is the new Voice Mode—enabling “coding by speaking” for real.

Copying and pasting prompts that contain context tags has also been improved, and many explicit items (such as @Definitions, @Web, @Link, @Recent Changes, @Linter Errors) have been removed from the context menu. Agents can now gather context on their own, so it no longer has to be attached manually in the prompt input.

Cursor’s Shift from “Wrapper” Product to Self-Developed Models

Cursor has long been criticized as a “wrapper” tool, a product layered on top of other companies’ models. Despite being valued at $10 billion, it had never launched a proprietary model of its own.

In the past, Cursor was constrained by Claude and its pricing, and most of its revenue flowed to model providers such as Anthropic, the company behind Claude. This reliance on external models limited Cursor’s ability to innovate independently and left it with higher costs and thinner profit margins.

The launch of Composer marks Cursor’s entry into the AI race with a model of its own. For any company that grows large enough, developing proprietary models is an inevitable path. As one online commenter put it: “A $10 billion company cannot rely on a wrapper app as its moat forever.”

NVIDIA’s Endorsement at GTC 2025

At the recently opened GTC 2025 conference, Jensen Huang singled out Cursor:

“Here at NVIDIA, every software engineer uses Cursor. It’s like everyone’s coding partner—helping generate code and significantly boosting productivity.”

Extended Reading: Building a Coding Model with Reinforcement Learning

In software development, speed and intelligence are both perennial goals, and the experience of Cursor Tab (Cursor’s self-developed autocomplete model) shows why. Many prominent developers on X (formerly Twitter) have called Cursor Tab their favorite feature. Autocomplete requires a model to understand the surrounding code instantly and to finish the edit quickly, a case of wanting both at once.

Developers want models that are intelligent enough for real work yet fast enough for interactive use, so they can stay focused and in flow while coding.

Cursor initially tested a prototype model codenamed “Cheetah”; Composer is a more intelligent upgrade of this prototype. It delivers sufficient speed to support interactive experiences, ensuring smooth and enjoyable coding. Balancing “speed” and “intelligence” is precisely Composer’s core goal.

Composer is a Mixture of Experts (MoE) language model that supports long-context generation and understanding. It has been specialized for software engineering through reinforcement learning (RL) in a diverse range of development environments.
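For readers unfamiliar with MoE, the core idea is that a small router picks a few experts per input and only those experts run. The following is a minimal sketch of generic top-k gating on scalar inputs; the article does not disclose Composer’s expert count, router design, or value of k, so every name and number here is an assumption for illustration.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits: list[float], k: int = 2) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, router_logits: list[float], experts, k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


if __name__ == "__main__":
    # Four toy "experts"; in a real model each would be a feed-forward network.
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
    print(route_top_k([0.0, 2.0, 1.0, -1.0], k=2))
    print(moe_forward(3.0, [0.0, 2.0, 1.0, -1.0], experts, k=2))
```

Since only k experts execute per input, total parameter count can grow without a proportional increase in compute per token, which is one reason MoE suits a model that has to be both capable and fast.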

In each training iteration, the model receives a problem description and is asked to produce the best response, whether that is a code edit, a plan, or an informative answer. The model can use simple tools (e.g., reading and editing files) or invoke more powerful ones (e.g., terminal commands, semantic search across an entire codebase).
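The shape of one such episode can be sketched as a loop in which a policy alternates between tool calls and observations until it gives a final answer. This is a toy illustration under stated assumptions: the workspace is an in-memory dictionary, the action format is invented, and `scripted_policy` is a hypothetical stand-in for the model that replays a fixed plan. It is not Cursor’s training harness.

```python
from typing import Callable

Workspace = dict[str, str]  # file path -> contents (in-memory stand-in for a repo)


def read_file(ws: Workspace, path: str) -> str:
    return ws.get(path, "")


def edit_file(ws: Workspace, path: str, old: str, new: str) -> str:
    ws[path] = ws.get(path, "").replace(old, new)
    return "ok"


# Tool registry: the policy refers to tools by name.
TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "edit_file": edit_file}


def run_episode(ws: Workspace, policy, max_steps: int = 10) -> str:
    """Feed each tool result back to the policy until it returns an answer."""
    observation = ""
    for _ in range(max_steps):
        action = policy(observation)
        if action["type"] == "answer":
            return action["text"]
        observation = TOOLS[action["tool"]](ws, *action["args"])
    return "step limit reached"


def scripted_policy():
    """Hypothetical stand-in for the model: replays a fixed plan of actions."""
    plan = iter([
        {"type": "tool", "tool": "read_file", "args": ["app.py"]},
        {"type": "tool", "tool": "edit_file", "args": ["app.py", "retun", "return"]},
        {"type": "answer", "text": "Fixed the typo in app.py."},
    ])
    return lambda observation: next(plan)


if __name__ == "__main__":
    workspace = {"app.py": "def f():\n    retun 1\n"}
    print(run_episode(workspace, scripted_policy()))
    print(workspace["app.py"])
```

In RL training, a reward computed from the final workspace state and answer would be used to update the policy; that step is omitted here.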

How Reinforcement Learning Optimizes Composer

Reinforcement learning enables targeted optimization of the model to better serve efficient software engineering:

- Since response speed is critical for interactive development, the model is encouraged to make efficient tool-selection decisions and maximize parallel processing where possible.

- The model is trained to be a more helpful assistant by reducing unnecessary responses and avoiding unfounded claims.

- During RL training, the model independently acquires useful capabilities, such as performing complex searches, fixing linter errors, and writing/executing unit tests.
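To see why parallel tool use matters for latency, consider three independent tool calls that each wait on I/O. The sketch below compares running them one after another with running them concurrently via `asyncio.gather`. The `fake_tool` coroutine and its delays are hypothetical placeholders, not Cursor’s actual tools.

```python
import asyncio
import time


async def fake_tool(name: str, delay: float) -> str:
    """Hypothetical tool call; the sleep stands in for I/O latency."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def run_sequential(calls):
    # Each call starts only after the previous one has finished.
    return [await fake_tool(n, d) for n, d in calls]


async def run_parallel(calls):
    # gather() starts all calls at once and returns results in input order.
    return await asyncio.gather(*(fake_tool(n, d) for n, d in calls))


def timed(coro_fn, calls):
    """Run a coroutine function to completion and report wall-clock time."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(calls))
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    calls = [("grep", 0.05), ("read_file", 0.05), ("semantic_search", 0.05)]
    _, t_seq = timed(run_sequential, calls)
    _, t_par = timed(run_parallel, calls)
    print(f"sequential: {t_seq:.3f}s, parallel: {t_par:.3f}s")
```

Sequential time grows with the sum of the delays, while parallel time is bounded by the slowest call, so a policy that batches independent calls finishes its turn sooner.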

Infrastructure for Training Large-Scale MoE Models

Efficiently training large MoE models requires substantial investment in infrastructure and systems research. Cursor built a custom training infrastructure based on PyTorch and Ray to support asynchronous reinforcement learning at scale.

By combining MXFP8 MoE kernels with expert parallelism and hybrid sharded data parallelism, Cursor trains the model natively in low precision, which lets training scale to thousands of NVIDIA GPUs with very little communication overhead. Training in MXFP8 also yields faster inference without any post-training quantization.
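MXFP8 is a block-scaled format: a small group of values shares one power-of-two scale, and each value is stored as an 8-bit float relative to that scale. The sketch below simulates the idea in plain Python, assuming the OCP Microscaling convention of an E8M0 shared scale with FP8 E4M3 elements (the specification uses 32-element blocks; a 4-element block is used here for readability). It is a simplified illustration with NaN and infinity handling omitted, and it says nothing about how Cursor’s kernels are implemented.

```python
import math

E4M3_MAX = 448.0     # largest finite FP8 E4M3 value
E4M3_EMAX = 8        # exponent of that value (448 = 1.75 * 2**8)
E4M3_MIN_EXP = -6    # smallest normal exponent; smaller values are subnormal
MANTISSA_BITS = 3


def floor_log2(a: float) -> int:
    """Exact floor(log2(a)) for a > 0, via frexp (a = m * 2**e, 0.5 <= m < 1)."""
    return math.frexp(a)[1] - 1


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    a = min(abs(x), E4M3_MAX)
    exp = max(floor_log2(a), E4M3_MIN_EXP)
    step = 2.0 ** (exp - MANTISSA_BITS)  # spacing between neighbours at this exponent
    return math.copysign(min(round(a / step) * step, E4M3_MAX), x)


def quantize_block(block: list[float]) -> tuple[int, list[float]]:
    """Return (shared power-of-two exponent, E4M3 element values) for one block."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Align the block's largest magnitude with the top exponent of E4M3.
    shared_exp = floor_log2(amax) - E4M3_EMAX
    shared_exp = max(-127, min(127, shared_exp))  # range of the E8M0 scale
    scale = 2.0 ** shared_exp
    return shared_exp, [round_to_e4m3(v / scale) for v in block]


def dequantize_block(shared_exp: int, elems: list[float]) -> list[float]:
    return [e * 2.0 ** shared_exp for e in elems]


if __name__ == "__main__":
    shared_exp, q = quantize_block([0.5, -1.0, 3.0, 0.0])
    print(shared_exp, q, dequantize_block(shared_exp, q))
```

Because the scale is set by the largest magnitude in the block, elements much smaller than that maximum keep fewer significant bits, which is why the block size is kept small.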

Firsthand Testing of Composer

Shortly after updating Cursor to Version 2.0, we tested the model firsthand. Our first impression: it is genuinely fast. Nearly every prompt finished quickly, with results generated in as little as 10–20 seconds.

Test Cases & Results

- Replicating a macOS Webpage: We asked it to generate a webpage mimicking macOS, though the final result resembled a Linux interface.

- Simulating a Spacecraft Flight (Earth to Mars): The outcome was less polished.

- Front-End UI Generation: Composer was strongest here. The logic was not always correct, but the generated page designs were impressive.

As seen in our screen recording, generation was so fast that the index.html file could not even be captured in time. Speed tests posted by other users online were consistent with what we observed.

Another test: running multiple agents simultaneously—this feature worked smoothly as well.

Developer Feedback & Market Context

In our tests, we noticed that Cursor 2.0 downplays the role of external models. The external models listed beneath Composer are Grok, DeepSeek, and K2, and all but Grok are open-source. Notably, GPT and Claude are no longer listed.

Early access developers shared mixed feedback:

- Many praised Composer’s speed but noted its intelligence lags behind Sonnet 4.5 and GPT-5.

- Some developers who prefer command-line (CLI) tools to IDEs said Cursor 2.0 had not won their teams over.

- Positive feedback focused on the multi-agent mode, with users noting it works exceptionally well in wide-screen mode.