Anonymous Reviewer Exposes Flaws in Peer Review Process

An anonymous AAAI 2026 peer reviewer’s viral Reddit post has ignited fierce debate over academic integrity, describing the review process as “the most chaotic” they’ve encountered. According to the post, strong papers were rejected, weak ones advanced, and suspected “relationship papers” sailed through, pointing to a system seemingly undermined by bias and inconsistency.

AI’s Growing Role Raises Concerns

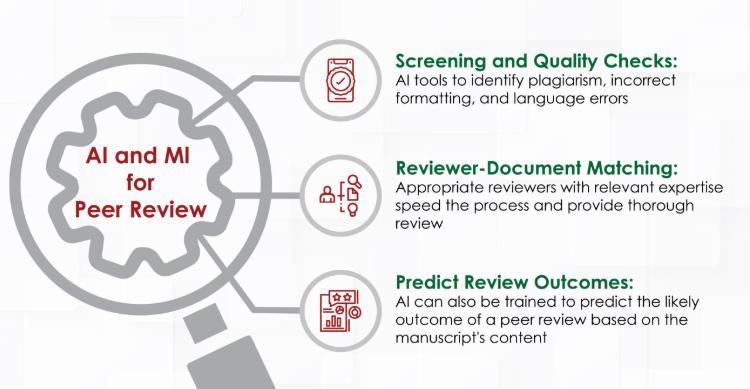

Adding to the controversy: AI now summarizes detailed reviewer critiques for Area Chairs (ACs), who often rely on these summaries to make final decisions. While AAAI 2026 claims AI is “only assistive,” reviewers worry its influence skews outcomes, turning peer review into a “summary bias” game. One Redditor bluntly stated, “Human judgment is being quietly replaced.”

Systemic Issues Surface

The reviewer highlighted systemic flaws: Phase 1 reviewers hold veto power, Phase 2 lacks context, which leads to inconsistent scores, and the official two-phase process feels like an “experiment in algorithmic governance.” Comments flooded in, with researchers sharing similar experiences and questioning the field’s future. “I’m quitting this field. I just can’t do it anymore,” one wrote.

Core Question: Can Fairness Survive?

The incident isn’t just about one conference; it reflects broader anxieties about AI’s role in academia. As algorithms summarize human judgment, the community faces an uncomfortable question: if AI-generated summaries outweigh expert analysis, what remains of peer review? The reviewer’s closing line summed it up: “If this paper gets accepted, I might never review for AAAI again.” It is a sign of lost faith not just in a process, but in the trust that underpins it.